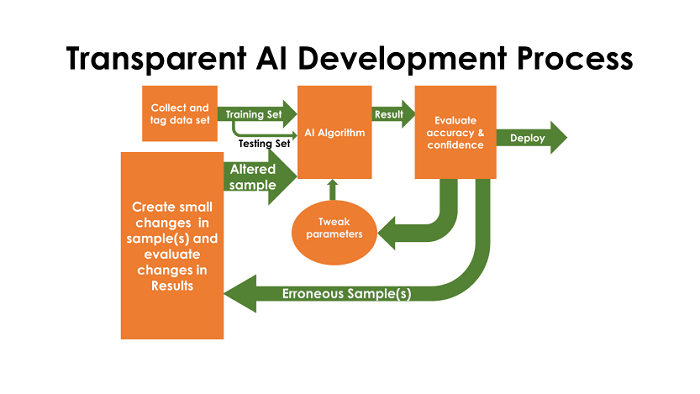

Historically, the problem of AI transparency comes down to one issue: once an AI system is working, developers don’t know what’s going on inside it. The AI essentially becomes a black box with inputs and outputs. Developers can see that the outputs usually correspond correctly to the inputs, but they don’t know why. And when an erroneous output occurs, they can’t tell why that happened either. All of this makes such a system very difficult to improve.

Enter a new class of programs that analyze an AI’s behavior to explain what’s going on. In their well-known 2016 paper, “‘Why Should I Trust You?’: Explaining the Predictions of Any Classifier,” Marco Tulio Ribeiro, Sameer Singh, and Carlos Guestrin present a striking example. When an AI misidentified a husky as a wolf, the authors probed it and learned that the reason for the misclassification was the snow in the image. The wolf pictures in the training set also contained snow (the authors had assembled the training set that way on purpose, to test whether an explanation would expose the flaw). As a result, the AI had effectively learned that pictures with snow were likely to be wolves, not dogs.

You might expect that directly probing the AI’s internals would be the way to resolve this issue, but that approach remains elusive. Instead, a program such as LIME (Local Interpretable Model-agnostic Explanations), the open-source tool used in this example, makes small changes to the input image and observes which changes alter the output. In this case, replacing areas of snow with something else changed the result, while altering areas of the dog didn’t. The conclusion is that the AI’s decision was based on the snow. Using tools like LIME, many AI professionals are learning that AIs often make decisions for reasons we didn’t expect and, regrettably, not for reasons that reflect intelligence.
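To make the perturbation idea concrete, here is a minimal Python sketch loosely modeled on LIME’s approach: randomly hide parts of the input, query the classifier on each altered version, then fit a proximity-weighted linear model whose coefficients show which parts mattered. It is not the LIME library’s API. The segment names, the toy `black_box_wolf_prob` classifier (built by hand to rely on snow, mimicking the husky/wolf case), the distance measure, and the kernel width are all illustrative assumptions.

```python
import numpy as np

# Hypothetical interpretable components ("superpixels") of the husky photo.
SEGMENTS = ["snow_left", "snow_right", "dog_head", "dog_body", "sky"]


def black_box_wolf_prob(mask: np.ndarray) -> float:
    """Toy stand-in for an opaque classifier (an assumption, not a real model).

    Returns P(wolf) for a version of the image in which only segments with
    mask == 1 are kept. By construction it looks only at the snow segments.
    """
    snow = mask[0] + mask[1]  # how many snow segments remain visible
    return float(1.0 / (1.0 + np.exp(-(2.0 * snow - 1.0))))


def explain(predict, n_features, n_samples=1000, kernel_width=0.25, seed=0):
    """LIME-style local explanation: returns one importance score per segment."""
    rng = np.random.default_rng(seed)
    # 1. Perturb: randomly switch each segment on (1) or off (0).
    Z = rng.integers(0, 2, size=(n_samples, n_features))
    Z[0] = 1  # always include the unaltered original image
    # 2. Query the black box on every perturbed image.
    y = np.array([predict(z) for z in Z])
    # 3. Weight samples by closeness to the original (assumed distance:
    #    fraction of segments removed, fed through an exponential kernel).
    distance = 1.0 - Z.mean(axis=1)
    weights = np.exp(-(distance ** 2) / kernel_width ** 2)
    # 4. Fit a weighted linear surrogate y ~ b0 + Z @ coef via least squares.
    X = np.hstack([np.ones((n_samples, 1)), Z])
    sw = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)
    return coef[1:]  # drop the intercept; keep per-segment weights


if __name__ == "__main__":
    importance = explain(black_box_wolf_prob, len(SEGMENTS))
    for name, score in sorted(zip(SEGMENTS, importance), key=lambda p: -p[1]):
        print(f"{name:>10}: {score:+.3f}")
```

Because the surrogate is fitted mostly to samples close to the original image, its weights describe the classifier’s behavior locally, for this one prediction, rather than globally. With the toy classifier above, the snow segments should receive clearly positive weights while the dog segments stay near zero, which is the same kind of evidence that pointed to the snow in the husky example.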

The Present State of AI

Given this state of affairs, AI professionals are recognizing that today’s AI looks more and more like sophisticated statistical analysis and less and less like actual intelligence. To take the next step on the road to genuine intelligence, consider Moravec’s paradox, which can be paraphrased as: the simpler an intelligence problem seems, the more difficult it is to implement. Saying a task is “so simple any three-year-old can do it” is the absolute kiss of death.

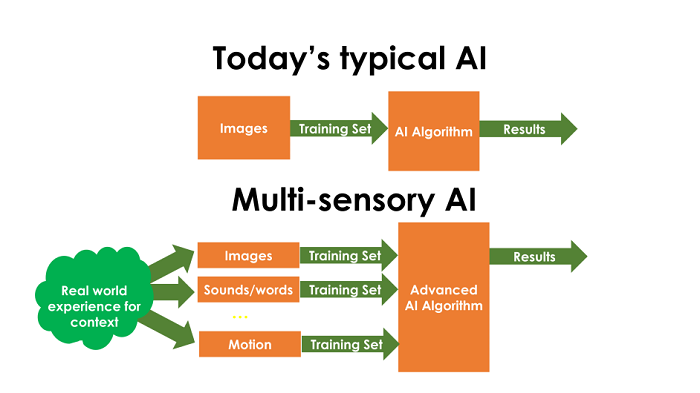

Consider that any child can learn a language from scratch. Children understand the passage of time and grasp basic physics: round things roll and square things don’t. They recognize that objects fall down, not up, and they understand cause and effect. How? Quite simply, children learn through multiple senses and through interaction with objects. This gives a child an advantage over AI: he or she learns everything in the context of everything else. A child knows from playing with blocks that a stack must be built before it can fall down, and that blocks will never spontaneously stack themselves. A dog, by contrast, might spontaneously do any number of things.

AI, of course, has none of this context. To AI, images of dogs or blocks are just different arrangements of pixels. Neither a purely image-based program nor a primarily word-based one has any notion of a “thing” that exists in reality, is more or less permanent, and is subject to basic laws of physics.

In summary, improving AI transparency will help us improve our systems, because we will be better able to identify and eliminate biases in our training sets. In the longer term, however, AI transparency is also highlighting the limitations of today’s AI approaches, opening the door to new algorithms and approaches for creating true intelligence.

About the Author

Charles Simon, BSEE, MSCS, is a nationally recognized entrepreneur and software developer with many years of experience in the computer industry, including pioneering work in AI. Mr. Simon’s technical experience includes the creation of two unique artificial intelligence systems along with software for successful neurological test equipment. Combining AI development with biomedical nerve-signal testing gives him singular insight. He is also the author of Will the Computers Revolt?: Preparing for the Future of Artificial Intelligence, and the developer of Brain Simulator II, an AGI research software platform that combines a neural network model with the ability to write code for any neuron cluster, making it easy to mix neural and symbolic AI code. More information on Mr. Simon can be found at: https://futureai.guru/Founder.aspx

