I eagerly attended my 3rd GPU Technology Conference (GTC): “Deep Learning & AI Conference,” in Silicon Valley, March 23-26 as a guest of host NVIDIA. GTC has become my favorite tech event of the year because its highly focused topic areas align well with my own: data science, machine learning, AI, and deep learning. The show also has an academic feel that I appreciate.

NVIDIA CEO Jensen Huang delivered another one of his patented marathon keynote addresses, which unveiled the company’s vision for the upcoming year. The company had to move the keynote’s location from the San Jose Convention Center to a very large hall at San Jose State University (complete with pedicabs provided by Kinetica). At around 2 hours and 40 minutes, Huang’s keynote was seamless and riveting, masterfully delivered with no notes or teleprompter. He outlined a new moniker for all of NVIDIA’s GPU-accelerated computing libraries: CUDA-X. As he does during each of his keynotes, Huang introduced a new term of the day; in this case it was PRADA, for Programmable, Acceleration, Domains, Architecture.

I was not disappointed with my experience this year at GTC, and this Field Report hopefully gives you a sense for being there. To start, I found NVIDIA’s announcements to be far reaching and broad as usual, but this year there were a couple of specific technology areas that I enjoyed learning about.

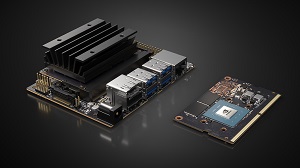

NVIDIA Jetson Nano family

Announced at the show was the Jetson Nano™, an AI computer that makes it possible to create millions of intelligent systems. The small but powerful CUDA-X™ AI computer delivers 472 GFLOPS of compute performance for running modern AI workloads and is highly power-efficient, consuming as little as 5 watts. Unveiled at the GPU Technology Conference by NVIDIA founder and CEO Jensen Huang, Jetson Nano comes in two versions — the $99 devkit for developers, makers and enthusiasts and the $129 production-ready module for companies looking to create mass-market edge systems. Jetson Nano supports high-resolution sensors, can process many sensors in parallel and can run multiple modern neural networks on each sensor stream. It also supports many popular AI frameworks, making it easy for developers to integrate their preferred models and frameworks into the product.

Also announced was the NVIDIA GPU-Accelerated Data Science Workstation designed to help millions of data scientists, analysts and engineers make better business predictions faster and become more productive. Purpose-built for data analytics, machine learning and deep learning, the systems provide the extreme computational power and tools required to prepare, process and analyze the massive amounts of data used in fields such as finance, insurance, retail and professional services. NVIDIA-powered workstations for data science are based on a powerful reference architecture made up of dual, high-end NVIDIA Quadro RTX™ GPUs and NVIDIA CUDA-X AI™ accelerated data science software, such as RAPIDS™, TensorFlow, PyTorch and Caffe. CUDA-X AI is a collection of libraries that enable modern computing applications to benefit from NVIDIA’s GPU-accelerated computing platform.

NVIDIA CEO Press Q&A

Members of the press had the opportunity to have an intimate chat (around 75 of us) with NVIDIA CEO Jensen Huang. During this hour-long session, Huang answered a range of questions and stressed two items of key importance for this year’s GTC: ray tracing, and data science as the new HPC workload. I especially liked the second item, so I spent time at the conference drilling down on this message.

Huang also said a few words about the crypto-mining crash from late last year, which left NVIDIA with ‘excess’ inventory of its Pascal product. The sentiment was “it’s over!” Hopefully the company’s stock price will recover to its pre-crash high of $290.

Huang also showed off his new sneakers that his daughter bought him (see inset photo).

Encounters and Chance Encounters

Attending GTC is always important to me as an opportunity for catching up with colleagues here at insideAI News. As a geographically distributed organization, conferences are a great way for the team to strategize in person about how to make our publication even better for our expanding readership.

Being at GTC is also great for chance encounters. Case in point, I was attending a session presented by H2O.ai Director of Research Jonathan McKinney. His session was just about wrapping up and he was featuring some awesome active benchmark visualizations pitting CPUs vs. GPUs. It was overwhelmingly clear how much better GPUs are for ML/AI workloads. But time was running late and I had to rush off for my next interview with an industry exec. Just as I walked through the open door of the session room, lo and behold, there was NVIDIA CEO Jensen Huang standing there watching the talk. He must have been quite pleased to see how Jon just blew Intel out of the water with those benchmarks!

Sessions, Sessions, Sessions!

GTC 2019 had an overabundance of technical sessions to meet the needs of a very broad audience. After surveying the conference agenda, I found an interesting session for just about every time slot. Alas, I had a lot of meetings during my short time at the show and couldn’t check out all the sessions I would have liked. Nevertheless, my two favorite sessions were:

- Implementing Machine Learning with GPUs by Jonathan McKinney of H2O.ai.

- Smart Active Analytical Applications: The Shift from Passive to Active Analytics by Irina Farooq, Chief Product Officer at Kinetica.

Bookstore by Digital Guru

A really nice touch offered by GTC is the on-site technical bookstore hosted by venerable technical book sales company DigitalGuru. The very accessible “store” was set up just outside the Exhibition Hall. I chatted with the owner, who recounted the days when DigitalGuru had a storefront in nearby Sunnyvale. Alas, Amazon decimated this jewel and nowadays the company only sells tech books by providing contract services at various technical conferences around the world. As the event business continues to grow, DigitalGuru continues to offer its services to conferences and other expositions, appearing at 35 conferences a year. The owner vets the selection of books very well for the specific event audience.

I always treat myself to one book each time I attend GTC. This time it was a great find: Practical Mathematical Optimization, by Jan A. Snyman and Daniel N. Wilke. This is the second edition of a respected text on a subject that’s an integral part of machine learning and deep learning (e.g. gradient descent). This is not just another yellow book (Springer) to add to my already sizeable collection. It’s a detailed mathematical treatment of optimization theory. A new chapter was added to demonstrate the use of Python for programming optimization problems. There is even a code module containing all the algorithms discussed in the text. I had the opportunity to really dig into the text as my flight back to LA was delayed and I was sitting in a packed plane for over an hour waiting for takeoff. Thanks for saving my sanity, DigitalGuru and GTC!

Academic Posters Galore

One of my favorite features of GTC is the academic flavor supported by the rows and rows of posters on the main concourse level. I spent a couple of hours staring at my favorite handful. Here is a gallery of all the posters submitted. Due to my previous life as a researcher in astrophysics, my favorite poster was “Deep Learning for Detecting Gravitational Waves.”

So Many Exhibitors, So Little Time!

As editor of insideAI News, attending tech conferences is my chance to have meaningful face-to-face meetings with members of the big data ecosystem, and GTC had a large exhibition venue packed with companies. I’ve compiled my “Best of Show” list below of firms providing technology products and services that align well with our primary areas of focus: big data, data science, machine learning, AI and deep learning. I suggest you drill down on each to see what they have to offer.

ArrayFire develops accelerated computing software and deep learning enabled algorithms. The open source ArrayFire library is used by thousands of engineers globally in a broad range of technical computing applications.

Bonseyes is a collaborative community of entrepreneurs, researchers, investors, regulators and citizens. We are uncovering ways to build dependable AI solutions to solve industry challenges. Bonseyes is transforming AI development from a cloud-centric model, dominated by internet companies, to an edge-centric model through a marketplace and an open AI laboratory.

Dasha is the AI platform that allows you to design, test and launch rich, human-level voice conversations. Automate your entire workflow and bring your costs down or sell your own solutions built on Dasha AI platform.

DataDirect Networks (DDN) is a leading at-scale storage supplier for data-intensive, global organizations. The DDN A³I® architecture allows organizations to deploy quickly, generate value and accelerate time to insight using AI and DL. A³I systems are high-performance parallel appliances that deliver data to applications with high bandwidth and low latency, ensuring full GPU resource utilization even with distributed applications running on servers like NVIDIA® DGX-1™ systems.

Determined AI enables Deep Learning applications to be built faster, cheaper, and more reliably. Our AutoML platform reduces time-to-market by increasing developer productivity, improving GPU utilization, and reducing risk. The Determined AI engineering team is comprised of machine learning and distributed systems experts from UC Berkeley and CMU.

Dotscience makes data science teams more productive, by enabling collaboration, flexible access to high performance compute, and version control. Their model governance and auditability tools ensure that every model data science teams build is suitable for deployment in highly regulated environments.

FASTDATA.io has created PlasmaENGINE® — the GPU-native stream and hyper-batch processing solution to fully leverage NVIDIA GPUs and Apache Arrow for true real-time processing of infinite data in motion over multiple nodes, with multiple GPUs, for unprecedented speed and performance.

Figure Eight’s platform combines human intelligence at scale with cutting-edge models to create the highest quality labeled data for your machine learning (ML) projects. You run your data through Figure Eight’s platform and it provides the annotations, judgments, and labels you need to create accurate ground truth for your models.

From conception to production, Gradient° enables individuals and teams to quickly develop and collaborate on deep learning models. Join over a hundred thousand developers on the platform and enjoy 1-click Jupyter notebooks, pre-built templates, a python library and powerful low-cost GPUs.

Groupware Technology partners with leading vendors to build data and analytics solutions to service your needs from data to deployment.

H2O.ai is a leader in AI with its visionary open source platform, H2O. Its mission to democratize AI starts by making training and inference faster, easier, and safer. With Auto Feature Engineering, AutoViz and Machine Learning Interpretability, H2O.ai’s Driverless AI is building trust in AI for all.

The Hewlett Packard Enterprise mission is to make AI real for customers. We make AI real by helping customers use Artificial Intelligence (AI) as a competitive advantage to help deliver business value. With a comprehensive AI portfolio of a powerful infrastructure, coupled with expert advice, and strong engineering partnerships, such as NVIDIA, HPE is a trusted leader in the AI revolution.

Iguazio’s platform powers data science from exploration to production, enabling data science teams to build and deploy more intelligent models faster. It provides pre-installed data science tools like Jupyter, Spark and Pandas, access to multi-model fresh data, real-time performance and one-click deployment to production.

Kazuhm is a next generation workload processing platform that empowers companies to maximize all compute resources from desktop to server, to cloud.

Kinetica is the insight engine for the Extreme Data Economy. The Kinetica engine combines artificial intelligence and machine learning, data visualization and location-based analytics, and the accelerated computing power of a GPU database across healthcare, energy, telecommunications, retail, and financial services.

MapR Technologies, provider of the industry’s next generation data platform for AI and Analytics, enables enterprises to inject analytics into their business processes to increase revenue, reduce costs, and mitigate risks.

ORBAI develops revolutionary Artificial Intelligence (AI) technology based on our proprietary and patent-pending time-domain neural network architecture for computer vision, sensory, speech, navigation, planning, and motor control technologies that we license for applications in AI.

Pure Storage (NYSE: PSTG) helps innovators build a better world with data. Pure’s data solutions enable SaaS companies, cloud service providers, and enterprise and public sector customers to deliver real-time, secure data to power their mission-critical production, DevOps, and modern analytics environments in a multi-cloud environment.

SAS is a leader in analytics. Through innovative software and services, SAS empowers and inspires customers around the world to transform data into intelligence.

Scale AI is committed to accelerating the development of AI. Our suite of managed labeling services such as Sensor Fusion (For LiDAR and RADAR Annotation), 2D Box, 3D Cuboid, Semantic Segmentation, and Categorization combine manual labeling with best in class tools and machine driven checks to yield impeccable training data.

SigOpt empowers experts to build world-class models. By designing solutions that automate model optimization, SigOpt accelerates the impact of experimentation on machine learning, deep learning, simulation and other AI models.

SQream has redefined big data analytics with SQream DB, a complementary SQL data warehouse harnessing the power of GPU to enable fast, flexible, and cost-efficient analysis of massive datasets of terabytes to petabytes.

WekaIO Matrix™, the world’s fastest shared parallel file system, leapfrogs legacy storage infrastructures by delivering simplicity, scale, and faster performance for a fraction of the cost.

Contributed by Daniel D. Gutierrez, Managing Editor and Resident Data Scientist for insideAI News. In addition to being a tech journalist, Daniel is also a data science consultant, author, and educator, and sits on a number of advisory boards for various start-up companies.

We are currently considering building a GPU-based real-time analytics infrastructure.

We aim to collect real-time data from existing legacy systems and, whenever the data changes, run an ML algorithm to rank the offers presented to customers.

We are reviewing Iguazio and Kinetica, but there are not many cases in Korea, so it is difficult to get detailed information.

I wonder if you can compare the difference between the two solutions and the appropriate fields.