Years ago, building financial models for banks was exhaustive – this was back when model features were hand-crafted and approved by steering committees. This was pre-deep learning days, where AI was nowhere in sight. Since features were manual, extensive data quality checks were the norm. Analysts and associates in charge of regular audits would notice how data flowed from one system to another – many times it was ‘Garbage in, garbage out’.

Data quality back then was a big problem – the nature of data quality issues differ across data types like images/video, audio, text. There would always be that duplicate, outlier, missing data, corrupted text, or typo. But even after years of advances in data engineering and “artificial intelligence”, data quality, particularly structured tabular data, remains a big problem. In fact, it is a growing problem. But that is also why it is an exciting problem to solve.

Why Data Quality is a Growing Problem

More decisions are based on data

The growth of BI tools like Tableau, market intelligence tools like AppAnnie, and A/B testing tools like Optimizely all point to the trend of data-driven decisions. These tools are useless if the underlying data cannot be trusted. We lose trust in these tools fast if data quality issues keep popping up. Bad decisions are made and business opportunities lost. Gartner regularly surveys the cost of bad data and for years it remains at >$10 million per enterprise even after spending $200,000 annually on data quality tools.

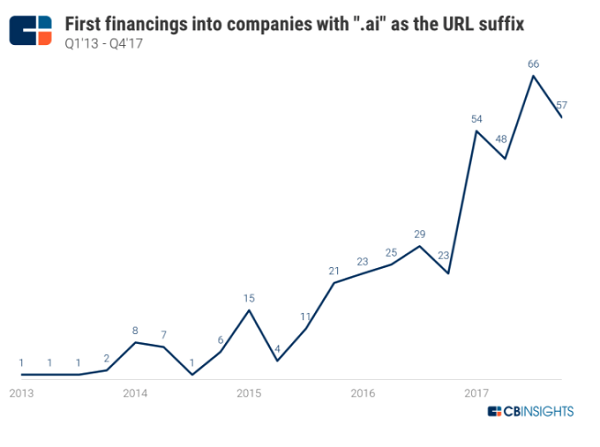

More companies are built on data

Waves of machine learning companies are built on data. If you’ve talked to machine learning engineers and data scientists, you’ll always hear how important good data is.

Why Data Quality Remains a Problem

Lots of data but static one-time checks is still the status quo

Cloud changed the mindset from storing only useful data to storing all potentially useful data. Streaming technologies like Kafka and cloud data warehouses like Snowflake lower the friction to store more data – at a higher velocity, wider variety, and larger volume. To ensure quality, data rules specifying the range and schema are manually written by consultants or data quality analysts. This is an expensive time-consuming process that is usually done only once. There are two problems with this. The first is that a majority of the rules are learned in production – when a problem surfaces. A case of unknown unknowns. The second is data is dynamic, requiring rules to be dynamic.

“Trusted” sources of data are still problematic

Three sectors have data quality top of mind more than any other: public sector, healthcare, and financial services. But these industries continue to be plagued with bad data. The data governance leader of a healthcare company once said that government data they receive monthly are littered with errors. Imagine, we are being governed based on dirty data and we need dedicated staff to clean it.

Specialization of data professionals

It used to be that data professionals covered more of the gamut of ingesting data, transforming, cleaning, and analyzing/querying it. Now we have different professionals tackling each step. Data engineers handle ingestion and transformation. Sometimes transformation is skipped and data is just pushed directly into data warehouses to be transformed later. Data scientists build models and are busy tuning deep learning knobs to improve accuracy. And business analysts query and write reports. They have more context of what the data should look like yet are separate from the data engineers who write and implement data quality checks.

Huge Opportunity Up for Grabs

The current data quality software market is almost $2 billion. But the real opportunity is much bigger at $4.5 billion since there is a large labor component that can be automated. There are 21,000 data quality roles in the US each paid $80,000 annually. This doesn’t capture those who work in professional services firms that have ‘consultants’ as job titles. Assuming this multiplies the direct labor component by 1.5x, the total labor component is $2.5B. The market will continue to grow healthily as data quality becomes more important in ML applications and business decisions.

While Informatica, SAP, Experian, Syncsort, and Talend currently dominate the market, a new wave of startups is propping up. Current products and how they are sold can be much better – from being a turnkey solution plugged into any part of the data pipeline, to not requiring a 6-month implementation period and 6-figure upfront commitments, to automating data quality rule writing and monitoring.

About the Author

Kenn So is an investor at Shasta Ventures. Kenn’s passionate about the potential of artificial intelligence (AI). While earning his MBA degree at Kellogg School of Management, Kenn started the Artificial Intelligence Club to help students understand how AI is changing the business landscape. He also led hackathons and pitch nights to bridge the gap between engineering and business students. Kenn is excited to see and work with companies that are building smart software products that leverage AI. Kenn grew up in the Philippines and lived across Asia. Raised in a family of restaurateurs, he is fond of family-run restaurants.

Sign up for the free insideAI News newsletter.

Speak Your Mind