Artificial Intelligence (AI) and Deep Learning (DL) represent some of the most demanding workloads in modern computing history, as they present unique challenges to compute, storage and network resources. In this technology guide, insideAI News Guide to Optimized Storage for AI and Deep Learning Workloads, we'll see how traditional file storage technologies and protocols like NFS starve AI workloads of data, reducing application performance and impeding business innovation. A state-of-the-art AI-enabled data center should concurrently and efficiently service the entire spectrum of activities involved in DL workflows, including data ingest, data transformation, training, inference, and model evaluation.

The intended audience for this important new technology guide includes enterprise thought leaders (CIOs, director-level IT, etc.), along with data scientists and data engineers who are seeking guidance on specialized hardware infrastructure for AI and DL. The guide emphasizes real-world applications, workloads, and present-day challenges.

A³I – Accelerated, Any-scale AI Solutions

DDN's A³I® (Accelerated, Any-Scale AI) optimized storage solutions break new ground for AI and DL, enabling platforms that maximize business value through an optimized AI environment spanning applications, compute, containers, and networks.

Engineered from the ground up for the AI-enabled data center, A³I solutions accelerate AI applications and streamline DL workflows using the DDN shared parallel architecture. Flash can scale up or scale out independently of the capacity layers, all within a single integrated solution and namespace. A³I solutions provide unmatched flexibility for your organization's AI needs.

DDN A³I solutions are easy to deploy and manage, highly scalable in both performance and capacity, and represent a highly efficient and resilient platform for your present and future AI requirements. Below is a summary of the benefits of A³I solutions, along with product summaries for the DDN AI200, AI400 and AI7990.

DDN AI200® and AI400®

DDN's AI200 and AI400 are efficient, reliable and easy-to-use data storage systems for AI and DL applications. With end-to-end parallelism, the AI200 and AI400 eliminate the bottlenecks associated with NFS-based platforms and deliver the performance of NVMe flash directly to AI applications.

Both the AI200 and AI400 can scale horizontally as a single, simple namespace. They integrate tightly with hard disk tiers to help manage economics when data volumes expand. The AI200 and AI400 are specifically optimized to keep GPU computing resources fully saturated, ensuring maximum efficiency while easily managing challenging data operations, from non-continuous ingest processes to large scale data transformations.
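What "keeping GPUs saturated" asks of storage can be estimated with simple arithmetic: the sustained read bandwidth must at least equal the number of GPUs times the samples each GPU consumes per second times the average sample size. The sketch below is a back-of-envelope model, not a DDN sizing tool; the function names and the example figures (8 GPUs, 2,000 samples/s per GPU, 110 KB per sample) are hypothetical, and the model ignores caching, data augmentation cost and repeated epochs.

```python
def required_read_bandwidth(num_gpus: int,
                            samples_per_sec_per_gpu: float,
                            avg_sample_bytes: int) -> float:
    """Bytes/s that storage must sustain so no GPU waits on input data.

    Hypothetical back-of-envelope model: assumes every sample is read
    from shared storage once per step (no local caching).
    """
    return num_gpus * samples_per_sec_per_gpu * avg_sample_bytes


def gpus_saturated(storage_bytes_per_sec: float,
                   num_gpus: int,
                   samples_per_sec_per_gpu: float,
                   avg_sample_bytes: int) -> bool:
    """True if the storage bandwidth covers the GPUs' combined demand."""
    demand = required_read_bandwidth(num_gpus, samples_per_sec_per_gpu,
                                     avg_sample_bytes)
    return storage_bytes_per_sec >= demand


if __name__ == "__main__":
    # Hypothetical example: 8 GPUs, each consuming 2,000 samples/s of ~110 KB.
    demand = required_read_bandwidth(8, 2000, 110_000)
    print(f"{demand / 1e9:.2f} GB/s")  # prints "1.76 GB/s"
```

Scaling the same numbers to more servers multiplies the demand linearly, which is why the multi-server reference configurations listed later pair more GPU servers with more storage throughput.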

DDN's A³I reference architectures are designed in collaboration with NVIDIA® and HPE to ensure the highest performance, optimal efficiency, and flexible growth for NVIDIA DGX-1™ servers, NVIDIA DGX-2™ servers and HPE Apollo 6500 servers. Extensive testing with widely used AI and DL applications demonstrates that a single AI200 provides tremendous acceleration for data preparation, neural network training and inference tasks on GPU compute servers.

DDN AI7990

DDN's AI7990 is a hybrid flash and hard disk data storage platform designed for AI and DL workflows. With end-to-end parallel access to flash and deeply expandable hard disk drive (HDD) storage, the AI7990 outperforms traditional NAS platforms and delivers the economics of HDD for expanding data repositories. Delivering flash performance directly to your AI application, the AI7990 brings new levels of simplicity and flexibility to help deal with unforeseen AI deployment challenges. The AI7990 is faster, denser, more scalable and more flexible in deployment than other storage platforms, with up to 23GB/s of filesystem throughput and over 750K IOPS in 4RU as a single namespace. DDN flash and spinning disk storage are tightly integrated within a single unit for up to 1PB in just 4RU. As with the AI200/AI400 systems, the AI7990 keeps GPU servers saturated.

A³I with HPE Apollo 6500™

The DDN A³I scalable architecture fully integrates HPE Apollo 6500 servers with DDN AI all-flash parallel file storage appliances to deliver fully optimized, end-to-end AI and DL workflow acceleration. A³I solutions greatly simplify the use of the Apollo 6500 server while delivering the performance and efficiency needed for full GPU saturation, along with high levels of scalability.

The HPE Apollo 6500 Gen10 server is an ideal AI and DL compute platform providing unprecedented performance supporting industry leading GPUs, fast GPU interconnect, high bandwidth fabric, and a configurable GPU topology to match workloads.

The following reference architectures are specifically designed for AI and DL. They illustrate tight integration of DDN and HPE platforms.

Every solution has been designed and optimized for Apollo 6500 servers, and thoroughly tested by DDN in close collaboration with HPE.

- Configuration for single Apollo 6500 server with AI200

- Configuration for single Apollo 6500 server with AI400

- Configuration for four Apollo 6500 servers with AI200

- Configuration for four Apollo 6500 servers with AI400

Performance testing on the DDN A³I architecture has been conducted with synthetic throughput and IOPS testing applications, as well as widely used DL frameworks. The results demonstrate that, using the A³I intelligent client, applications can engage the full capabilities of the data infrastructure, and that the Apollo 6500 server consistently achieves full GPU saturation for DL workloads.

EXA5 (EXAScaler® Storage)

EXA5 provides deeper, more powerful integrations into AI and HPC ecosystems through simpler implementation and scaling models, easier visibility into workflows and powerful global data management features.

EXA5 allows scaling either with all-NVMe flash or with a hybrid approach, offering flexible growth with the performance of flash or the economics of HDDs. EXA5 is compatible with DDN's A³I storage solutions, which are factory-preconfigured and optimized for AI, reducing deployment time for an AI-ready data center. Together they provide full benchmarking, documentation and qualification with AI and GPU environments.

A primary feature of EXA5 is STRATAGEM, DDN's unique integrated policy engine, which provides transparent flash tiering for high performance and efficiency. STRATAGEM moves the most active data from scale-out HDD tiers into scale-out flash tiers, automatically controls free space on flash, responds quickly to changing demand, and finds target files by scanning storage devices directly. Its key features include:

- Built-in fast and efficient data management

- Movement of the most active data into scale-out flash

- Automatic control of free space on flash

- Quick response to changing demand

- Efficient namespace scanning
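The guide does not describe how STRATAGEM decides what to move, so the following is only a generic illustration of watermark-based flash tiering, not DDN's algorithm. It assumes a hypothetical `FileInfo` record per file and hypothetical thresholds: when flash usage rises above a high watermark, the least recently used flash-resident files are demoted until usage falls to a low watermark; recently accessed HDD-resident files are then promoted while room remains below the high watermark.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class FileInfo:
    """Hypothetical per-file record gathered by a namespace scan."""
    path: str
    size: int            # bytes
    last_access: float   # seconds since epoch
    on_flash: bool       # True if the file currently lives on the flash tier


def plan_flash_tiering(files: List[FileInfo],
                       flash_capacity: int,
                       now: float,
                       high_watermark: float = 0.9,
                       low_watermark: float = 0.7,
                       hot_window: float = 3600.0) -> Tuple[List[str], List[str]]:
    """Return (paths to promote to flash, paths to demote to HDD).

    Generic watermark policy, illustrative only: demote LRU flash files
    when usage exceeds the high watermark, then promote recently used
    HDD files while usage stays at or below the high watermark.
    """
    used = sum(f.size for f in files if f.on_flash)
    high = high_watermark * flash_capacity
    low = low_watermark * flash_capacity

    # Free space: evict least recently used flash files first.
    demote: List[str] = []
    if used > high:
        for f in sorted((f for f in files if f.on_flash),
                        key=lambda f: f.last_access):
            if used <= low:
                break
            demote.append(f.path)
            used -= f.size

    # Promote "hot" HDD files, most recently accessed first.
    promote: List[str] = []
    hot = sorted((f for f in files
                  if not f.on_flash and now - f.last_access <= hot_window),
                 key=lambda f: f.last_access, reverse=True)
    for f in hot:
        if used + f.size <= high:
            promote.append(f.path)
            used += f.size

    return promote, demote


if __name__ == "__main__":
    files = [FileInfo("a", 50, 10.0, True), FileInfo("b", 45, 100.0, True),
             FileInfo("c", 20, 990.0, False), FileInfo("d", 30, 0.0, False)]
    print(plan_flash_tiering(files, flash_capacity=100, now=1000.0, hot_window=100.0))
    # prints (['c'], ['a'])
```

The gap between the two watermarks provides hysteresis, so the policy does not thrash by demoting and re-promoting files on every scan.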

EXA5 also includes small-file performance enhancements (useful for the mixed workloads of unstructured data), along with integrated end-to-end data integrity verification that checks data all the way from the application to the storage media.
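The guide does not say how EXA5 implements end-to-end integrity checking. Conceptually, a checksum is computed where the data originates, travels with the data, and is re-verified where the data is consumed, so corruption anywhere along the path is detected instead of silently returned. The toy sketch below illustrates only that idea; the `ChecksummedStore` class, its in-memory "media" and the choice of CRC32 (`zlib.crc32`) are assumptions for illustration.

```python
import zlib
from typing import Dict, Tuple


class ChecksummedStore:
    """Toy store illustrating end-to-end checksums (hypothetical, not EXA5)."""

    def __init__(self) -> None:
        # Simulated storage media: key -> (data, checksum computed by the writer)
        self._media: Dict[str, Tuple[bytes, int]] = {}

    def write(self, key: str, data: bytes) -> None:
        # The checksum is computed on the application side, before the data
        # leaves it, and is stored alongside the data.
        checksum = zlib.crc32(data)
        self._media[key] = (bytes(data), checksum)

    def read(self, key: str) -> bytes:
        # Re-verify on the way back to the application.
        data, checksum = self._media[key]
        if zlib.crc32(data) != checksum:
            raise OSError(f"checksum mismatch for {key!r}")
        return data

    def corrupt_first_byte(self, key: str) -> None:
        # Demonstration helper: flip one bit of the stored data (non-empty data only).
        data, checksum = self._media[key]
        self._media[key] = (bytes([data[0] ^ 0x01]) + data[1:], checksum)


if __name__ == "__main__":
    store = ChecksummedStore()
    store.write("shard-0", b"training samples")
    print(store.read("shard-0"))  # prints b'training samples'
    store.corrupt_first_byte("shard-0")
    try:
        store.read("shard-0")
    except OSError as err:
        print(err)  # prints checksum mismatch for 'shard-0'
```

A production system would also verify at intermediate hops (network, controller, drive), but the principle is the same: the check covers the whole path, not a single component.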

DDN EXAScaler Storage is now available as a service in the Google Cloud Platform (GCP) Marketplace, suited for dynamic, pay-as-you-go applications ranging from rapid simulation and prototyping to cloud bursting for peak HPC workloads. AI and analytics in the cloud can now benefit from EXAScaler performance and capabilities.

A³I Solutions for NVIDIA DGX: DGX-1 and DGX-2

DDN A³I solutions enable and accelerate data pipelines for AI and DL workflows of any scale running on DGX servers. They are designed to provide high performance and capacity through tight integration between DDN and NVIDIA platforms. Every layer of hardware and software engaged in delivering and storing data is optimized for fast, responsive and reliable file access.

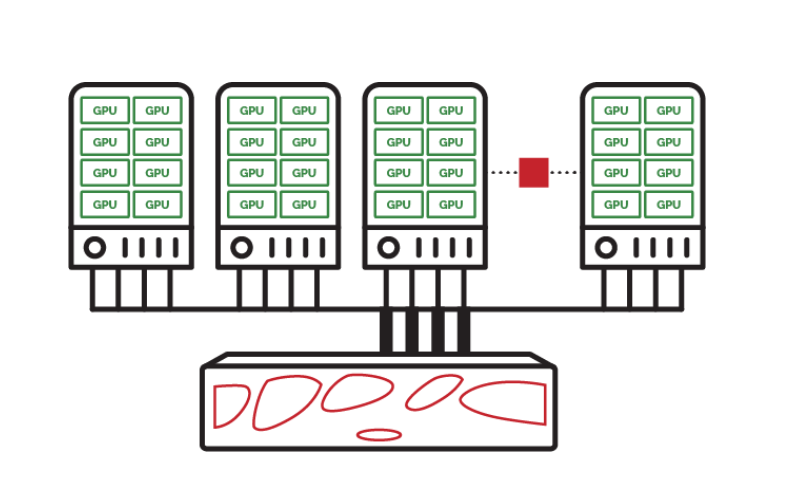

The DDN architecture provides highly optimized parallel data paths to AI and DL applications running on DGX servers. The following reference architectures are specifically designed for AI and DL. They illustrate tight integration of DDN and NVIDIA platforms. Every solution has been designed and optimized for DGX servers, and thoroughly tested by DDN in close collaboration with NVIDIA:

- Configuration for a single DGX-1/DGX-2 server with AI200

- Configuration for a single DGX-1/DGX-2 server with AI400

- Configuration for four DGX-1 servers with AI200

- Configuration for four DGX-1 servers with AI400

- Configuration for nine DGX-1 servers (DGX-1 POD) with AI200

- Configuration for nine DGX-1 servers (DGX-1 POD) with AI400

- Configuration for three DGX-2 servers (DGX-2 POD) with AI200

- Configuration for three DGX-2 servers (DGX-2 POD) with AI400

In terms of performance, the DDN A³I architecture provides high-throughput, low-latency data delivery for DL frameworks on the DGX-1 server. Extensive interoperability and performance testing has been completed using popular DL frameworks, notably TensorFlow, Horovod, Torch, PyTorch, NVIDIA TensorRT, Caffe, Caffe2, CNTK, MXNet and Theano. The figure below illustrates the GPU utilization and read activity from the AI200 storage appliance. The GPUs achieve maximum utilization, and the AI200 storage appliance delivers a steady stream of data to the application throughout the training process.

DDN is continuously engaged in developing technologies which enable breakthrough innovation and research in a broad range of use cases. Customers around the world are using DDN systems to expand their business, deliver innovation, and bring about social change that benefits everyone. The list below includes compelling use cases involving A³I solutions coupled with DGX servers to maximize the value of customer data and easily and reliably accelerate time to insight:

- AI powered retail checkout platform for video capture, processing and retention

- Real-time microscopy workflows

- AI platform for autonomous vehicle development

- In-vehicle autonomous vehicle development

- Clinical trial data collection, consolidation and analysis

This is the second in a series appearing over the next few weeks in which we explore these topics surrounding data platforms for AI & deep learning:

- Introduction, How Optimized Storage Solves AI Challenges

- A³I – Accelerated, Any-scale AI Solutions

- Frameworks for AI and DL Workflows

- Partners' Important Role in Leading-Edge Case Studies, Summary

If you prefer, the complete insideAI News Guide to Optimized Storage for AI and Deep Learning Workloads is available for download from the insideAI News White Paper Library, courtesy of DDN.
