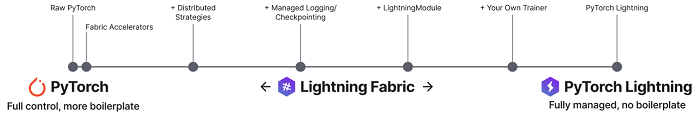

Lightning AI, the company accelerating the development of an AI-powered world, today announced the general availability of PyTorch Lightning 2.0, the company’s flagship open source AI framework used by more than 10,000 organizations to quickly and cost-efficiently train and scale machine learning models. The new release introduces a stable API, offers a host of powerful features with a smaller footprint, and is easier to read and debug. Lightning AI has also unveiled Lightning Fabric to give users full control over their training loop. This new library allows users to leverage tools like callbacks and checkpoints only when needed, and also supports reinforcement learning, active learning and transformers without losing control over training code.

The new releases are available for download at https://lightning.ai/pages/open-source/. Users seeking a simple, scalable training method that works out of the box can use PyTorch Lightning 2.0, while those looking for additional granularity and control throughout the training process can use Lightning Fabric. By extending its portfolio of open source offerings, Lightning AI is supporting a wider range of individual and enterprise developers as advances in machine learning accelerate.

Until now, machine learning practitioners have had to choose between two extremes: either using prescriptive tools for training and deploying machine learning models, or figuring everything out on their own. With the update to PyTorch Lightning and the introduction of Lightning Fabric, Lightning AI now offers users an extensive array of training options for their machine learning models. If users want features like checkpointing, logging, and early stopping included out of the box, the original PyTorch Lightning framework is the right choice for them. If users want to scale their model to multiple GPUs but also want to write their own training loop for tasks like reinforcement learning, a method used by OpenAI’s ChatGPT, then Fabric is the right choice for them.

“The dual launch of PyTorch Lightning 2.0 and Lightning Fabric marks a significant milestone for Lightning AI and our community,” said William Falcon, creator of PyTorch Lightning and the CEO and co-founder of Lightning AI. “Each release provides a robust set of options for companies with in-house machine learning developers interested in additional granularity, and those who want a simple scaling solution that works out of the box. As the size of models deployed in enterprise settings continues to expand, the ability to scale the training process in a way that is simple, lightweight, and cost-effective becomes increasingly important. Lightning AI is proud to be expanding the choices that users have to scale their models for downstream tasks whether those are internal, like analytics and content creation tools, or customer-facing, like ChatGPT and other customer service chatbots.”
