We’re seeing a rising number of new books on the mathematics of data science, machine learning, AI and deep learning, which I view as a very positive trend because data scientists need to understand the theoretical foundations of these technologies. In the coming months, I plan to review a number of these titles, but for now, I’d like to introduce a real gem: “Artificial Intelligence Engines: A Tutorial Introduction to the Mathematics of Deep Learning,” by James V. Stone (Sebtel Press, 2019). Dr. Stone is an Honorary Reader in Vision and Computational Neuroscience at the University of Sheffield, England.

The author provides a GitHub repo containing Python code examples based on the topics found in the book. Chapter 1 is also available as a free download.

The main reason I like this book so much is its tutorial format. It’s not a formal text on the subject matter, but rather a short and succinct (only 200 pages) guidebook for understanding the mathematical fundamentals of deep learning. I was able to skim its content in about 2 hours, and a thorough reading could be achieved in a few days depending on your math background. I’m working on a deep dive now, with notepad and pencil in hand, as I see the book providing a timely refresh of the math I first saw in grad school. What you gain in the end is a well-balanced picture of how deep learning works under the hood. Data scientists can “get by” without the math when working with deep learning, but much of the process becomes guesswork without the insights that the math brings to the table.

The book includes the following chapters:

- Artificial Neural Networks

- Linear Associative Networks

- Perceptrons

- The Backpropagation Algorithm

- Hopfield Nets

- Boltzmann Machines

- Deep RBMs

- Variational Autoencoders

- Deep Backprop Networks

- Reinforcement Learning

- The Emperor’s New AI?

In each chapter, you’ll find all the foundational mathematics to support the topic. You’ll need to know some basic calculus, linear algebra, and partial differential equations to move forward, but Stone includes several useful appendices to help you along: mathematical symbols, a vector and matrix tutorial, maximum likelihood estimation, and Bayes’ theorem.

The chapters also feature important algorithms presented in easy-to-understand pseudocode (there is no standard in this regard, but Stone’s rendition is very straightforward). One big feature of the book is its complete bibliography of seminal books and papers that have contributed to the advancement of deep learning over the years. The book gives a historical perspective on how deep learning evolved in decades past, coupled with the important papers along the way. Using this book as a guide, you’ll have a complete and detailed roadmap for deep learning and the long, often winding path it has taken.

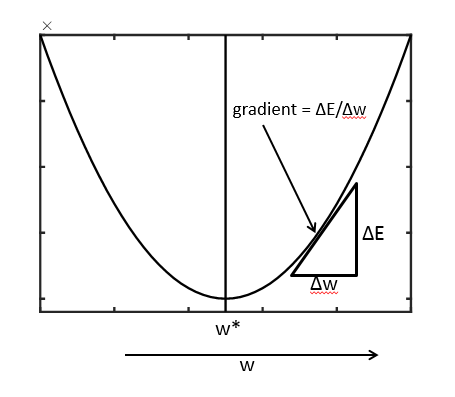

I especially like Stone’s treatment of gradient descent and backpropagation. If you’ve ever been confused about these building blocks of deep learning, this book’s tutorial on these subjects will give you a nice kick-start.
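For readers who want a feel for the idea before opening the book, here is a minimal sketch of gradient descent in Python. It is my own toy illustration, not code from the book or its GitHub repo; the function name `gradient_descent`, the quadratic loss, and the learning rate are all assumptions chosen for the example.

```python
def gradient_descent(grad, w0, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from w0.

    `grad` is a function returning the derivative of the loss at w.
    All names and default values here are illustrative assumptions.
    """
    w = w0
    for _ in range(steps):
        w -= learning_rate * grad(w)
    return w


# Toy loss f(w) = (w - 3)^2, whose derivative is 2 * (w - 3).
# Its minimum sits at w = 3, so the iterate should approach 3.
w_min = gradient_descent(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w_min)
```

Backpropagation is, roughly speaking, the bookkeeping that supplies `grad` for every weight in a multi-layer network, which is why the two topics are usually taught together.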

Topics like Hopfield Nets and Boltzmann Machines are included to provide a historical lineage. Hopfield Nets (circa 1982) preceded backprop networks. The Hopfield net is important because it is based on the mathematical apparatus of a branch of physics called statistical mechanics, which enabled learning to be interpreted in terms of energy functions. Hopfield nets led directly to Boltzmann machines, which represent an important stepping stone to modern deep learning systems. In this respect, it’s useful to see how deep learning has grown into its current state.
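To make the energy-function idea concrete, here is a small sketch of a Hopfield net in plain Python. It is an illustration under my own assumptions rather than Stone’s code: I use the textbook Hebbian outer-product rule to set the weights, units that take values +1 or -1, and the standard energy E = -1/2 Σ w_ij s_i s_j. The helper names (`train_hebbian`, `energy`, `recall`) are hypothetical.

```python
def train_hebbian(patterns):
    """Build a symmetric weight matrix from +1/-1 patterns (Hebbian rule)."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    w[i][j] += p[i] * p[j] / n
    return w


def energy(w, s):
    """Energy of state s: E = -1/2 * sum_ij w[i][j] * s[i] * s[j]."""
    n = len(s)
    return -0.5 * sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(n))


def recall(w, s, sweeps=5):
    """Update one unit at a time; each update never raises the energy."""
    s = list(s)
    for _ in range(sweeps):
        for i in range(len(s)):
            h = sum(w[i][j] * s[j] for j in range(len(s)))
            s[i] = 1 if h >= 0 else -1
    return s


stored = [1, -1, 1, -1, 1, -1]
w = train_hebbian([stored])
noisy = [1, -1, 1, -1, 1, 1]  # the stored pattern with its last unit flipped
recovered = recall(w, noisy)
print(recovered, energy(w, noisy), energy(w, recovered))
```

In this toy setting the corrupted pattern settles back to the stored one, and the recovered state has lower energy than the noisy one. That is the sense in which a stored memory acts as a minimum of the energy function.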

I would recommend this tutorial to any data scientist wishing to get quickly up to speed with the foundations of arguably the most important technology discipline today. The best time to move ahead with your education is now with this great resource!

Contributed by Daniel D. Gutierrez, Editor-in-Chief and Resident Data Scientist for insideAI News. In addition to being a tech journalist, Daniel is also a data science consultant, author, and educator, and sits on a number of advisory boards for various start-up companies.
