In this article, we’ll dive into the newly announced oneAPI, a single, unified programming model that aims to simplify development across multiple architectures, such as CPUs, GPUs, FPGAs, and other accelerators. The long-term journey begins with two important first steps: the industry initiative and the Intel beta product.

oneAPI: A Unified Cross-Architecture, High-Performance Programming Model Designed to Help Shape the Future of Application Development

Interview: Terry Deem and David Liu at Intel

I recently caught up with Terry Deem, Product Marketing Manager for Data Science, Machine Learning and Intel® Distribution for Python, and David Liu, Software Technical Consultant Engineer for the Intel® Distribution for Python, both from Intel, to discuss the Intel® Distribution for Python (IDP). We covered the classes of developers it targets, its use with commonly used Python packages for data science, benchmark comparisons, its use in scientific computing, and what the future holds for IDP.

Develop Multiplatform Computer Vision Solutions with Intel® Distribution of OpenVINO™ Toolkit

Realize your computer vision deployment needs on Intel® platforms—from smart cameras and video surveillance to robotics, transportation, and much more. The Intel® Distribution of OpenVINO™ Toolkit (includes the Intel® Deep Learning Deployment Toolkit) allows for the development of deep learning inference solutions for multiple platforms.

The AI Opportunity

The tremendous growth in compute power and the explosion of data are leading every industry to seek AI-based solutions. In this Tech.Decoded video, “The AI Opportunity – Episode 1: The Compute Power Difference,” Vice President of Intel Architecture and AI expert Wei Li shares his views on the opportunities and challenges in AI for software developers, how Intel is supporting their efforts, and where we’re heading next.

Fast-track Application Performance and Development with Intel® Performance Libraries

Intel continues to refine libraries optimized to yield the utmost performance from Intel® processors. The Intel® Performance Libraries provide developers with a large collection of prebuilt, tested, performance-optimized functions. By using these libraries, developers can reduce the cost and time of software development and maintenance and focus their efforts on their own application code.

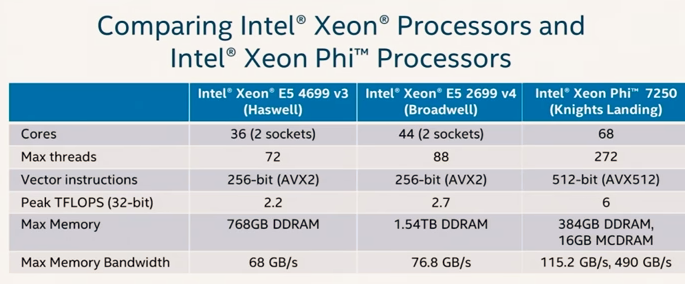

Intel’s New Processors: A Machine-learning Perspective

Machine learning and its younger sibling, deep learning, continue to increase the value of enterprise data assets across a variety of problem domains. A recent talk by Dr. Amitai Armon, Chief Data Scientist of Intel’s Advanced Analytics department, at the O’Reilly Artificial Intelligence Conference in New York on September 27, 2016, focused on using Intel’s new server processors for various machine learning tasks and on what to consider when choosing and matching processors for specific machine learning tasks.

Building Fast Data Compression Code for Cloud and Edge Applications

Finding efficient ways to compress and decompress data is more important than ever. Compressed data takes up less space and requires less time and network bandwidth to transfer. In this article, we’ll discuss the data compression functions and the latest improvements in the Intel® Integrated Performance Primitives (Intel® IPP) library.
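As a rough illustration of the space savings described above, the sketch below compresses and restores a buffer. It uses Python’s standard `zlib` module rather than Intel IPP, and the `payload` contents are a made-up example, so treat it as a minimal demonstration of the idea and not as the library’s API.

```python
import zlib

# Hypothetical payload: highly repetitive data, which compresses well.
payload = b"sensor_reading=42;" * 1000

# Compress at level 6 (a mid-range speed/size trade-off), then restore.
compressed = zlib.compress(payload, 6)
restored = zlib.decompress(compressed)

# Compare sizes; the exact ratio depends on the data and the level chosen.
ratio = len(payload) / len(compressed)
print(f"original: {len(payload)} bytes")
print(f"compressed: {len(compressed)} bytes")
print(f"ratio: {ratio:.1f}x")
```

Less repetitive data would yield a smaller ratio; the round trip back to the original bytes is what matters for correctness.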

Solutions for Autonomous Driving – From Car to Cloud

From car to cloud―and the connectivity in between―there is a need for automated driving solutions that include high-performance platforms, software development tools, and robust technologies for the data center. With Intel GO automotive driving solutions, Intel brings its deep expertise in computing, connectivity, and the cloud to the automotive industry.

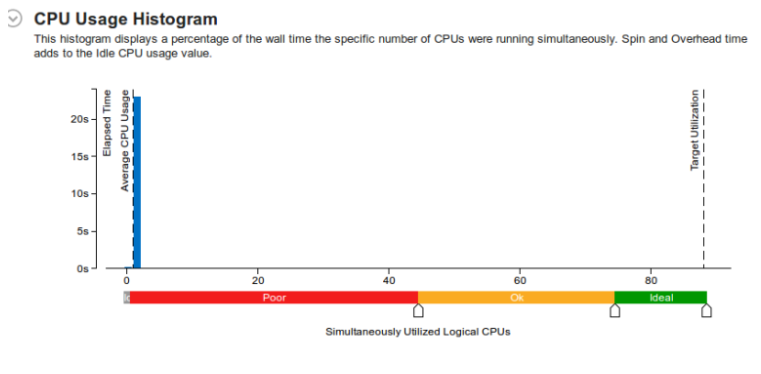

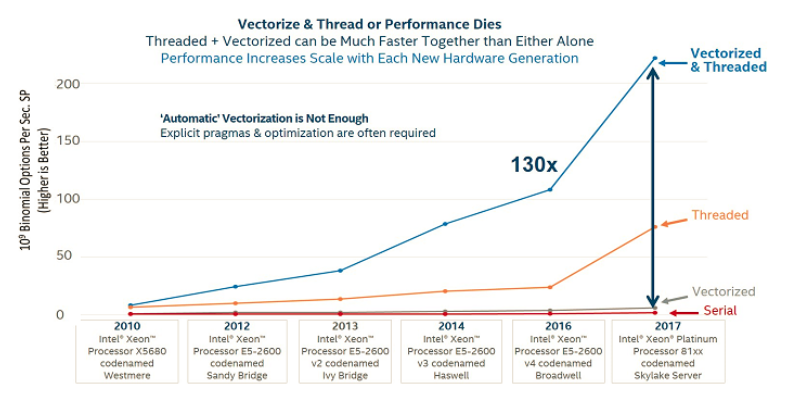

The Importance of Vectorization Resurfaces

Vectorization offers potential speedups in codes with significant array-based computations—speedups that amplify the improved performance obtained through higher-level, parallel computations using threads and distributed execution on clusters. Key features for vectorization include tunable array sizes to reflect various processor cache and instruction capabilities and stride-1 accesses within inner loops.
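To make “stride-1 accesses within inner loops” concrete, here is a small sketch that lists the flat memory offsets a nested loop would touch in a row-major array. The function names and the 3×4 shape are illustrative assumptions; the code only models the access pattern and does not itself vectorize anything.

```python
def row_major_offsets(n_rows, n_cols, inner="col"):
    """Flat offsets visited by a nested loop over a row-major 2D array.

    inner="col": the inner loop walks along a row (contiguous elements).
    inner="row": the inner loop walks down a column (jumps of n_cols).
    """
    offsets = []
    if inner == "col":
        for i in range(n_rows):
            for j in range(n_cols):
                offsets.append(i * n_cols + j)
    else:
        for j in range(n_cols):
            for i in range(n_rows):
                offsets.append(i * n_cols + j)
    return offsets


def inner_stride(offsets):
    """Distance between the first two accesses of the inner loop."""
    return offsets[1] - offsets[0]


contiguous = row_major_offsets(3, 4, inner="col")
strided = row_major_offsets(3, 4, inner="row")

print("inner loop over columns, stride:", inner_stride(contiguous))
print("inner loop over rows, stride:", inner_stride(strided))
```

With the column index innermost, consecutive accesses are adjacent in memory, which is the pattern compilers can usually map onto vector loads; with the row index innermost, each access jumps by a full row.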

Julia: A High-Level Language for Supercomputing and Big Data

Julia is a new language for technical computing that is meant to address the problem of language environments not designed to run efficiently on large compute clusters. It reads like Python or Octave, but performs as well as C. It has built-in primitives for multi-threading and distributed computing, allowing applications to scale to millions of cores. In addition to HPC, Julia is also gaining traction in the data science community.