I recently caught up with Terry Deem, Product Marketing Manager for Data Science, Machine Learning and Intel® Distribution for Python, and David Liu, Software Technical Consultant Engineer for the Intel® Distribution for Python*, both from Intel, to discuss the Intel® Distribution for Python (IDP): targeted classes of developers, use with commonly used Python packages for data science, benchmark comparisons, the solution’s use in scientific computing, and a look to the future with respect to IPD. This Q&A is a follow-up to a previous sponsored post, “Supercharge Data Science Applications with the Intel® Distribution for Python.”

Terry specializes in developer tools and the developer community. Terry has covered a wide variety of tools for Intel from the highly popular XDK to the industry-standard Media Server Studio. He currently covers Intel’s machine learning tool such as Intel® Data Analytics Acceleration Library (Intel® DAAL), Intel® Math Kernel Library for Deep Neural Networks (Intel® MKL-DNN) and Intel Distribution for Python.

David specializes in Python* and Machine Learning applications and open-source software development. David has represented the Intel® Distribution for Python* and other Python-based projects at Intel and does much of the community work in the Python and SciPy ecosystem.

insideAI News: Hello Terry and David! Let’s talk about the Intel® Distribution for Python*. Can you tell our readers who will benefit most from this distribution?

Terry Deem: The Intel® Distribution for Python is best suited for the needs of data scientists, data engineers, deep learning practitioners, scientific programmers, and HPC developers—really, any Python programmer in these fields. Because it supports SciPy, NumPy and scikit-learn, it supports the needs of this breadth of developers really well. They’ll want to use the accelerated version of scikit-learn, rather than the stock scikit-learn. With this accelerated version, we can demonstrate orders of magnitude performance increases in some cases, without changing any code. If these developers are using the packages in stock format, they’re really leaving performance on the table.

insideAI News: Yes, I know that many of our readers use Python, so this accelerated solution should be of great interest. Actually, this leads me to the next question, about commonly used Python packages. It is said that of data scientists who use Python, 90% use Pandas for data transformation tasks. How well does the Intel Distribution for Python perform with such packages?

David Liu: There are two places where you can obtain these accelerations in code. For Pandas, you have NumPy* accelerations; Pandas is composed on top of *NumPy and inherits its accelerations. We’ve also developed an open source technology, the High Performance Analytics Toolkit (HPAT), which compiles the Pandas calls utilizing Numba. HPAT enables acceleration beyond the limitations of stock Pandas by bypassing the Global Interpreter Lock (GIL) in Python for computation.

Terry Deem: And HPAT is really simple to use. You basically just have one line of code that you add to your existing code and away you go.

insideAI News: Our readers love benchmarks, i.e. speed comparison for different solutions. Can you comment on benchmarks for the Intel Distribution for Python? Do these benchmarks include any typical use cases often performed in a data science pipeline?

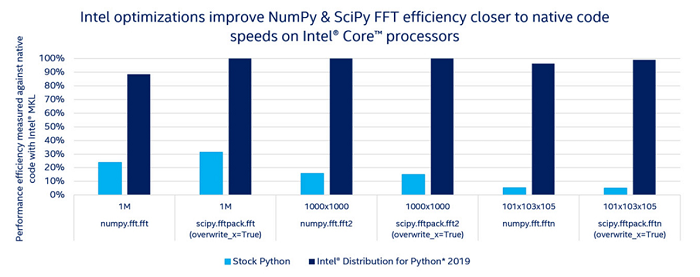

David Liu: Yes, we’ve developed extensive benchmarks for Intel Distribution for Python, such as the ibench benchmark, which I helped create. With this benchmark, you can actually trace every one of the individual calls, such as NumPy’s dot()function, an FFT or a convolution. Using ibench, you can view each of those simple connects as isolated function definitions, along with the benchmarking. ibench runs the test for each, and then gives you the runtimes. You can then use this data to test against stock Python and our distribution. This is the source of many of the website benchmarks as well. ibench allows for a simplified benchmarking case where you can do A/B testing with relative times.

insideAI News: My next question has to do with scientific computing. I know that Python’s NumPy* and SciPy* packages are the heart of scientific computing with Python*. Can you speak to Intel’s work in Python for scientific computing?

David Liu: In the case of NumPy and SciPy, there are a number of important optimizations for scientific functions. The biggest one is transcendental functions, which is where many of the big vectorization capabilities come through. We’ve done a lot of optimization of numpy.dot (), FFTs, Lower/Upper Decomposition (LU), and QR factorization. And we did some optimization on memory management and vectorization engine management to get the majority of the calls to work.

We really look at two forms of acceleration—vectorization scaling and core scaling. The concept is that the new additional types of vectorizations in Intel® Advanced Vector Extensions 512 (Intel® AVX-512) can give you one set of advantages. The next set of advantages will come from core scaling. The concept underlying core scaling is that if you increase the number of cores that are available to scale, your workload will get faster as you add more cores to it. So, it’s important that we address both vectorization and core scaling.

insideAI News: As you look forward using your proverbial crystal ball, can you give us insight into the roadmap for the Intel Distribution for Python?

Terry Deem: I’m looking forward to the next release, due out soon. It will have updated algorithms for the Intel® Data Analytics Acceleration Library and daal4py, the data science-first Python package to the library.

David Liu: Yes, there are additional algorithms and new compute modes available for them, specifically distributed and streaming, in this next release. One of the things that we’re also looking into is optimizing Python for Intel’s latest hardware, such as our FPGAs and accelerators. We’re looking at how we take our great portfolio of hardware—both existing and in the future—and ensure that users get the most out of it.

insideAI News: Is there anything else you’d like to add that our highly focused audience might like to hear about?

Terry Deem: I think the Intel Distribution for Python offers incredible performance and ease of use for a breadth of developers, data scientists and scientific computing professionals, without the need for them to rewrite their algorithms or code or get away from what they want to do. We understand that they don’t want to sit down, tune code and try to figure it out—they’d rather get the results. The value of Intel Distribution for Python is that they can download and start using it right away and get the performance that was just sitting there hidden inside their hardware all this time. Performance they didn’t even know they had because their code wasn’t using the fast instruction set in the CPU. They can get results overnight by using this simple technique.

David Liu: Another important point is reproducibility. That’s something that we care about. We ensure reproducibility, while delivering performance. I think our commitment to open source and our commitment to doing what is scientifically correct and achieving true reproducibility is of great importance.

Want to learn more? Download Intel® Distribution for Python* today >

Speak Your Mind