Algorithmiq, a scaleup developing quantum algorithms to solve the most complex problems in life sciences, has successfully run one of the largest-scale error mitigation experiments to date on IBM’s hardware. This achievement positions them, with IBM, as frontrunners to reach quantum utility for real-world use cases. The experiment was run with Algorithmiq’s proprietary error mitigation algorithms on IBM Nazca, a 127-qubit Eagle processor, using 50 active qubits × 98 layers of CNOTs, for a total of 2402 CNOT gates. This significant milestone for the field is the result of a collaboration between the two teams, who joined forces in 2022 to pave the way toward the first useful quantum advantage for chemistry.

IBM Launches $500 Million Enterprise AI Venture Fund

IBM (NYSE: IBM) today announced that it is launching a $500 million venture fund to invest in a range of AI companies – from early-stage to hyper-growth startups – focused on accelerating generative AI technology and research for the enterprise.

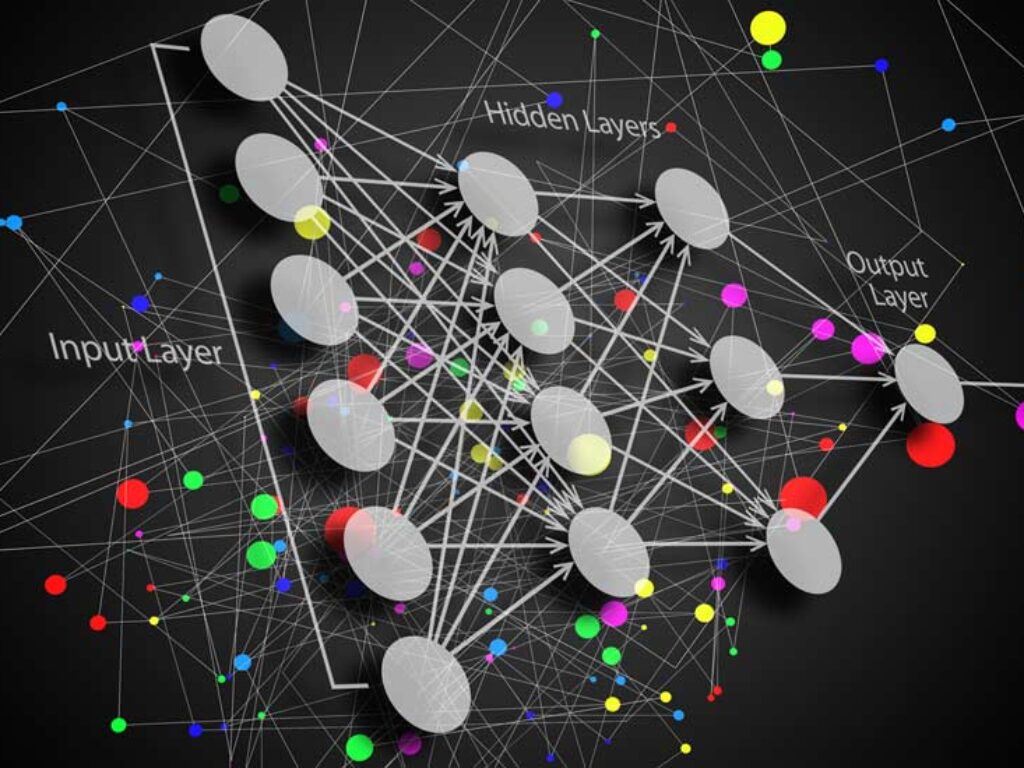

IBM’s Groundbreaking Analog AI Chip: Ushers New Era of Efficiency and Accuracy

In this contributed article, blogger Justin Varghise discusses a groundbreaking advancement for AI that IBM has unveiled: a cutting-edge analog AI chip that has the potential to redefine the landscape of deep neural networks (DNNs). This chip is 100 times more energy-efficient and up to 10 times faster than traditional digital AI chips for performing DNN computations.

AI for Legalese

Have you ever signed a lengthy legal contract you didn’t fully read? Or have you ever read a contract you didn’t fully understand? Contract review is a time-consuming and labor-intensive process for everyone concerned, including contract attorneys. Help is on the way. IBM researchers are exploring ways for AI to make tedious tasks like contract review easier, faster, and more accurate.

Five Reasons to Attend a New Kind of Developer Event

In this special guest feature, Ubuntu Evangelist Randall Ross writes that the OpenPOWER Foundation is hosting an all-new type of developer event. “The OpenPOWER Foundation envisioned something completely different. In its quest to redefine the typical developer event the Foundation asked a simple question: What if developers at a developer event actually spent their time developing?”

SQL Engine Leads the Herd

In almost every organization, SQL is at the heart of enterprise data used in transactional systems, data warehouses, columnar databases, and analytics platforms, to name just a few examples. Additionally, a vast number of commercial and in-house tools used to access, manipulate, and visualize data rely on SQL. SQL is the lifeblood of modern transaction and decision support systems.

TDWI Hadoop Readiness Guide

An organization’s readiness for Hadoop is not a single state held by a single entity. Corporations, government agencies, educational institutions, healthcare providers, and other types of organizations are complex in that they have multiple departments, lines of business, and teams for various business and technology functions. Each function can be at a different state of readiness for Hadoop, and each function can affect the success or failure of Hadoop programs.

Spectrum Scale Solution: IBM vs EMC

When considering enterprise storage software options, IT managers constantly strive to find the most efficient, scalable, and high-performance solutions that solve today’s storage performance and scalability challenges while future-proofing their investment to handle new workloads and data types. Enterprise backup solutions can be particularly vulnerable to issues stemming from poor network performance to the storage array(s), and are often not designed with the scalability demanded by rapidly changing enterprise environments.

Big Data Analytics: IBM

Businesses are discovering the huge potential of big data analytics across all dimensions of the business, from defining corporate strategy to managing customer relationships, and from improving operations to gaining a competitive edge. The open source Apache Hadoop project, a software framework that enables high-performance analytics on unstructured data sets, is the centerpiece of big data solutions. Hadoop is designed to process data-intensive computational tasks in parallel and at a scale that previously was possible only in high-performance computing (HPC) environments.

Cloud Adoption in Your Community

In conference rooms worldwide, enterprise IT departments are evaluating entry into ‘the cloud’. Armed with media reports and marketing materials, they are considering questions like, “Is the cloud appropriate for critical workloads? Will the cloud really save time and money? Does the cloud pose a security risk?”

There’s only one problem with such due diligence: there’s no such thing as ‘the cloud’. Instead, there are multiple clouds, with different configurations, offered by different providers and representing different degrees of benefit and risk.