In this video from GTC 2019 in San Jose, Harvey Skinner, Distinguished Technologist, discusses the advent of production AI and how the HPE AI Data Node offers a building block for AI storage. Commercial enterprises have been investigating and exploring how AI can improve their business. Now they’re ready to move from investigation into production. […]

Machine Learning Experts Gather in Boston

RE•WORK will host its annual East Coast events on Deep Learning and the Internet of Things in Boston on 12 & 13 May. Over 300 machine learning and IoT enthusiasts and experts will come together to hear keynote presentations, panel discussions, and fireside chats, and to explore the startup showcase area.

Dive Into the Deep Learning Revolution at the GPU Technology Conference

Artificial intelligence and machine learning change everything. Join the insideAI News team at the GPU Technology Conference, which will cover almost every aspect of the field. Deep learning, which couples the parallel processing capabilities of GPUs with the vast quantities of data unleashed by the internet, has unlocked a new generation of artificial intelligence applications. Image classification, video analytics, speech recognition, and natural language processing are just the start.

Big Data Reliability with Lustre

Data is money for businesses, now more than ever. With Big Data analytics, that means big money, and companies don’t want to risk losing their data and the value it represents. Lustre has proven its performance over the years, and lately companies have been deploying Lustre on their HPC clusters for Big Data analytics. But can Lustre keep a company’s data safe and available? Does Lustre’s latest release have what it takes to handle enterprise Big Data?

Bridging the MapReduce Skills Gap

Data is exploding at large organizations, and so is the adoption of Hadoop. Hadoop’s potential cost-effectiveness and its ability to ingest unstructured data are making it central to modern “Big Data” architectures. Yet a significant obstacle to Hadoop adoption has been a shortage of skilled MapReduce coders.

Lustre 101

This week’s Lustre 101 article looks at the history of Lustre and the typical configuration of this high-performance, scalable storage solution for big data applications.

Learning to Define – Software Defined Storage

Let’s start the conversation here: if you work with big data in the cloud, or deal with structured and unstructured data for analytics, you need software-defined storage.

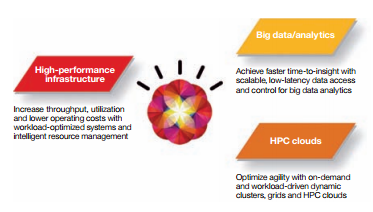

Optimizing Life Sciences – Deploying IBM Platform Computing

In your world, numbers and data can save lives, and minutes and seconds absolutely matter. Whether engaged in genome sequencing, drug design, product analysis, or risk management, life sciences research teams need high-performance technical environments that can process massive amounts of data and support increasingly sophisticated simulations and analyses.

Elastic Storage – The power of flash, software defined storage and data analytics

The rapid, accelerating growth of data, transactions, and digitally aware devices is straining today’s IT infrastructure. At the same time, storage costs are climbing and user expectations are rising. This staggering growth of data has created a need for high-performance streaming, data access, and collaborative data sharing. So how can Elastic Storage help?

insideAI News Guide to Big Data Solutions in the Cloud

For a long time, the industry’s biggest technical challenge was squeezing as many compute cycles as possible out of silicon chips, so that researchers could solve important, often gigantic, problems in science and engineering faster than was ever thought possible. Now, by clustering computers to work together, scientists are free to take on even larger and more complex real-world problems to compute, and larger volumes of data to analyze.